Update readme


parent

a4336a39d6

commit

064eba00fd

104

README.md

104

README.md

|

|

@@ -21,74 +21,64 @@ corresponding benchmarks are also added that demonstrate real performance boosts

|

||||||

feature set here will always be a ways behind the loom repo, but that this is an implementation

|

feature set here will always be a ways behind the loom repo, but that this is an implementation

|

||||||

you can take to the bank, literally.

|

you can take to the bank, literally.

|

||||||

|

|

||||||

Usage

|

Running the demo

|

||||||

===

|

===

|

||||||

|

|

||||||

Add the latest [silk package](https://crates.io/crates/silk) to the `[dependencies]` section

|

First, build the demo executables in release mode (optimized for performance):

|

||||||

of your Cargo.toml.

|

|

||||||

|

|

||||||

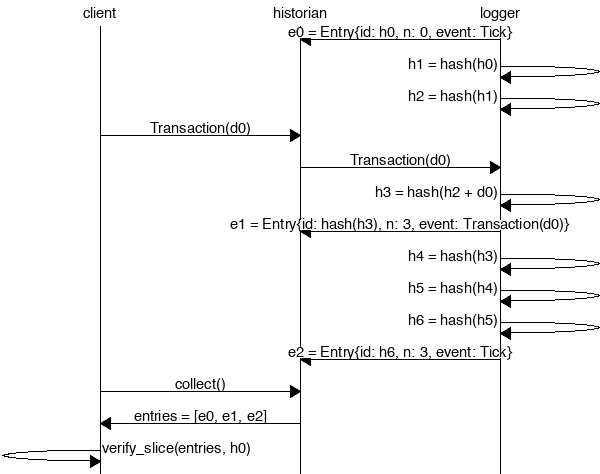

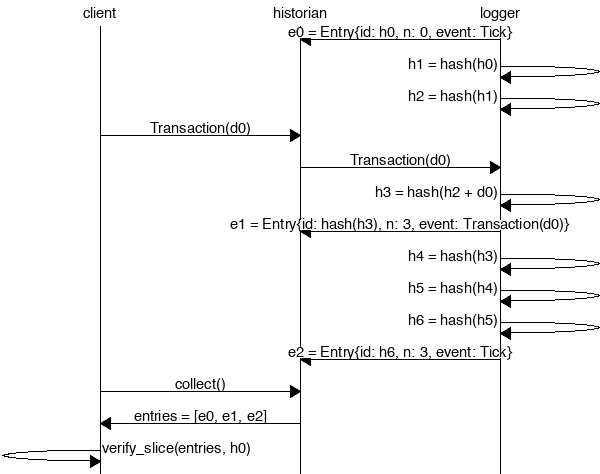

Create a *Historian* and send it *events* to generate an *event log*, where each log *entry*

|

```bash

|

||||||

is tagged with the historian's latest *hash*. Then ensure the order of events was not tampered

|

$ cargo build --release

|

||||||

with by verifying each entry's hash can be generated from the hash in the previous entry:

|

$ cd target/release

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

```rust

|

|

||||||

extern crate silk;

|

|

||||||

|

|

||||||

use silk::historian::Historian;

|

|

||||||

use silk::log::{verify_slice, Entry, Sha256Hash};

|

|

||||||

use silk::event::{generate_keypair, get_pubkey, sign_claim_data, Event};

|

|

||||||

use std::thread::sleep;

|

|

||||||

use std::time::Duration;

|

|

||||||

use std::sync::mpsc::SendError;

|

|

||||||

|

|

||||||

fn create_log(hist: &Historian<Sha256Hash>) -> Result<(), SendError<Event<Sha256Hash>>> {

|

|

||||||

sleep(Duration::from_millis(15));

|

|

||||||

let data = Sha256Hash::default();

|

|

||||||

let keypair = generate_keypair();

|

|

||||||

let event0 = Event::new_claim(get_pubkey(&keypair), data, sign_claim_data(&data, &keypair));

|

|

||||||

hist.sender.send(event0)?;

|

|

||||||

sleep(Duration::from_millis(10));

|

|

||||||

Ok(())

|

|

||||||

}

|

|

||||||

|

|

||||||

fn main() {

|

|

||||||

let seed = Sha256Hash::default();

|

|

||||||

let hist = Historian::new(&seed, Some(10));

|

|

||||||

create_log(&hist).expect("send error");

|

|

||||||

drop(hist.sender);

|

|

||||||

let entries: Vec<Entry<Sha256Hash>> = hist.receiver.iter().collect();

|

|

||||||

for entry in &entries {

|

|

||||||

println!("{:?}", entry);

|

|

||||||

}

|

|

||||||

// Proof-of-History: Verify the historian learned about the events

|

|

||||||

// in the same order they appear in the vector.

|

|

||||||

assert!(verify_slice(&entries, &seed));

|

|

||||||

}

|

|

||||||

```

|

```

|

||||||

|

|

||||||

Running the program should produce a log similar to:

|

The testnode server is initialized with a transaction log from stdin and

|

||||||

|

generates a log on stdout. To create the input log, we'll need to create

|

||||||

|

a *genesis* configuration file and then generate a log from it. It's done

|

||||||

|

in two steps here because the demo-genesis.json file contains a private

|

||||||

|

key that will be used later in this demo.

|

||||||

|

|

||||||

```rust

|

```bash

|

||||||

Entry { num_hashes: 0, id: [0, ...], event: Tick }

|

$ ./silk-genesis-file-demo > demo-genesis.json

|

||||||

Entry { num_hashes: 3, id: [67, ...], event: Transaction { data: [37, ...] } }

|

$ cat demo-genesis.json | ./silk-genesis-block > demo-genesis.log

|

||||||

Entry { num_hashes: 3, id: [123, ...], event: Tick }

|

|

||||||

```

|

```

|

||||||

|

|

||||||

Proof-of-History

|

Now you can start the server:

|

||||||

---

|

|

||||||

|

|

||||||

Take note of the last line:

|

```bash

|

||||||

|

$ cat demo-genesis.log | ./silk-testnode > demo-entries0.log

|

||||||

```rust

|

|

||||||

assert!(verify_slice(&entries, &seed));

|

|

||||||

```

|

```

|

||||||

|

|

||||||

[It's a proof!](https://en.wikipedia.org/wiki/Curry–Howard_correspondence) For each entry returned by the

|

Then, in a separate shell, let's execute some transactions. Note we pass in

|

||||||

historian, we can verify that `id` is the result of applying a sha256 hash to the previous `id`

|

the JSON configuration file here, not the genesis log.

|

||||||

exactly `num_hashes` times, and then hashing the event data on top of that. Because the event data is

|

|

||||||

included in the hash, the events cannot be reordered without regenerating all the hashes.

|

```bash

|

||||||

|

$ cat demo-genesis.json | ./silk-client-demo

|

||||||

|

```

|

||||||

|

|

||||||

|

Now kill the server with Ctrl-C and take a look at the transaction log. You should

|

||||||

|

see something similar to:

|

||||||

|

|

||||||

|

```json

|

||||||

|

{"num_hashes":27,"id":[0, ...],"event":"Tick"}

|

||||||

|

{"num_hashes":3,"id":[67, ...],"event":{"Transaction":{"data":[37, ...]}}}

|

||||||

|

{"num_hashes":27,"id":[0, ...],"event":"Tick"}

|

||||||

|

```

|

||||||

|

|

||||||

|

Now restart the server from where we left off. Pass it both the genesis log and

|

||||||

|

the transaction log.

|

||||||

|

|

||||||

|

```bash

|

||||||

|

$ cat demo-genesis.log demo-entries0.log | ./silk-testnode > demo-entries1.log

|

||||||

|

```

|

||||||

|

|

||||||

|

Lastly, run the client demo again and verify that all funds were spent in the

|

||||||

|

previous round, so no additional transactions are added.

|

||||||

|

|

||||||

|

```bash

|

||||||

|

$ cat demo-genesis.json | ./silk-client-demo

|

||||||

|

```

|

||||||

|

|

||||||

|

Stop the server again and verify there are only Tick entries and no Transaction entries.

|

||||||
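One way to make that last check is to count the entry kinds in the log. The sketch below is only an illustration and is not part of silk: `count_entries` is a hypothetical helper, and instead of parsing JSON it simply looks for the `"Tick"` and `"Transaction"` strings on each line, assuming one entry per line as in the sample log above.

```rust
use std::io::{self, BufRead};

// Hypothetical helper: returns (ticks, transactions) found in a line-oriented log.
// It matches on substrings only, so it assumes one JSON entry per line.
fn count_entries<R: BufRead>(reader: R) -> io::Result<(usize, usize)> {
    let mut ticks = 0;
    let mut transactions = 0;
    for line in reader.lines() {
        let line = line?;
        if line.contains("\"Transaction\"") {
            transactions += 1;
        } else if line.contains("\"Tick\"") {
            ticks += 1;
        }
    }
    Ok((ticks, transactions))
}

fn main() -> io::Result<()> {
    // Read the log from stdin, mirroring how the demo pipes logs around.
    let stdin = io::stdin();
    let (ticks, transactions) = count_entries(stdin.lock())?;
    println!("ticks: {}, transactions: {}", ticks, transactions);
    Ok(())
}
```

If this were built as a binary (the name `count-entries` is made up here), the second-round log could be piped into it with `cat demo-entries1.log | ./count-entries`; a transaction count of zero would match the expectation above.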

|

|

||||||

Developing

|

Developing

|

||||||

===

|

===

|

||||||

|

|

|

||||||

|

|

@@ -0,0 +1,65 @@

|

||||||

|

The Historian

|

||||||

|

===

|

||||||

|

|

||||||

|

Create a *Historian* and send it *events* to generate an *event log*, where each log *entry*

|

||||||

|

is tagged with the historian's latest *hash*. Then ensure the order of events was not tampered

|

||||||

|

with by verifying each entry's hash can be generated from the hash in the previous entry:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

```rust

|

||||||

|

extern crate silk;

|

||||||

|

|

||||||

|

use silk::historian::Historian;

|

||||||

|

use silk::log::{verify_slice, Entry, Sha256Hash};

|

||||||

|

use silk::event::{generate_keypair, get_pubkey, sign_claim_data, Event};

|

||||||

|

use std::thread::sleep;

|

||||||

|

use std::time::Duration;

|

||||||

|

use std::sync::mpsc::SendError;

|

||||||

|

|

||||||

|

fn create_log(hist: &Historian<Sha256Hash>) -> Result<(), SendError<Event<Sha256Hash>>> {

|

||||||

|

sleep(Duration::from_millis(15));

|

||||||

|

let data = Sha256Hash::default();

|

||||||

|

let keypair = generate_keypair();

|

||||||

|

let event0 = Event::new_claim(get_pubkey(&keypair), data, sign_claim_data(&data, &keypair));

|

||||||

|

hist.sender.send(event0)?;

|

||||||

|

sleep(Duration::from_millis(10));

|

||||||

|

Ok(())

|

||||||

|

}

|

||||||

|

|

||||||

|

fn main() {

|

||||||

|

let seed = Sha256Hash::default();

|

||||||

|

let hist = Historian::new(&seed, Some(10));

|

||||||

|

create_log(&hist).expect("send error");

|

||||||

|

drop(hist.sender);

|

||||||

|

let entries: Vec<Entry<Sha256Hash>> = hist.receiver.iter().collect();

|

||||||

|

for entry in &entries {

|

||||||

|

println!("{:?}", entry);

|

||||||

|

}

|

||||||

|

// Proof-of-History: Verify the historian learned about the events

|

||||||

|

// in the same order they appear in the vector.

|

||||||

|

assert!(verify_slice(&entries, &seed));

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

Running the program should produce a log similar to:

|

||||||

|

|

||||||

|

```rust

|

||||||

|

Entry { num_hashes: 0, id: [0, ...], event: Tick }

|

||||||

|

Entry { num_hashes: 3, id: [67, ...], event: Transaction { data: [37, ...] } }

|

||||||

|

Entry { num_hashes: 3, id: [123, ...], event: Tick }

|

||||||

|

```

|

||||||

|

|

||||||

|

Proof-of-History

|

||||||

|

---

|

||||||

|

|

||||||

|

Take note of the last line:

|

||||||

|

|

||||||

|

```rust

|

||||||

|

assert!(verify_slice(&entries, &seed));

|

||||||

|

```

|

||||||

|

|

||||||

|

[It's a proof!](https://en.wikipedia.org/wiki/Curry–Howard_correspondence) For each entry returned by the

|

||||||

|

historian, we can verify that `id` is the result of applying a sha256 hash to the previous `id`

|

||||||

|

exactly `num_hashes` times, and then hashing the event data on top of that. Because the event data is

|

||||||

|

included in the hash, the events cannot be reordered without regenerating all the hashes.

|

||||||
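The check described above can be sketched in a few lines of Rust. This is an illustrative sketch only, not silk's implementation: `ToyEntry`, `hash_step`, `next_id`, `toy_log`, and `toy_verify_slice` are hypothetical names, ids are plain `u64` values rather than SHA-256 digests, and the standard library's `DefaultHasher` stands in for SHA-256 so the example builds without external crates.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

// Hypothetical stand-in for a log entry; `data: None` plays the role of a Tick.
#[derive(Clone, Debug, PartialEq)]
struct ToyEntry {
    num_hashes: u64,
    id: u64,
    data: Option<Vec<u8>>,
}

// One hash step. DefaultHasher is NOT SHA-256; it is an assumption used only
// to keep this sketch dependency-free.
fn hash_step(prev: u64, data: Option<&[u8]>) -> u64 {
    let mut hasher = DefaultHasher::new();
    prev.hash(&mut hasher);
    if let Some(bytes) = data {
        bytes.hash(&mut hasher);
    }
    hasher.finish()
}

// Hash `start` exactly `num_hashes` times, then mix in the event data, if any.
fn next_id(start: u64, num_hashes: u64, data: Option<&[u8]>) -> u64 {
    let mut id = start;
    for _ in 0..num_hashes {
        id = hash_step(id, None);
    }
    match data {
        Some(bytes) => hash_step(id, Some(bytes)),
        None => id,
    }
}

// Build a log from (num_hashes, data) pairs, chaining each id to the previous one.
fn toy_log(seed: u64, events: &[(u64, Option<Vec<u8>>)]) -> Vec<ToyEntry> {
    let mut prev = seed;
    let mut entries = Vec::new();
    for (num_hashes, data) in events {
        let id = next_id(prev, *num_hashes, data.as_deref());
        entries.push(ToyEntry { num_hashes: *num_hashes, id, data: data.clone() });
        prev = id;
    }
    entries
}

// Recompute every id from its predecessor and compare it with the recorded one.
fn toy_verify_slice(entries: &[ToyEntry], seed: u64) -> bool {
    let mut prev = seed;
    for entry in entries {
        if next_id(prev, entry.num_hashes, entry.data.as_deref()) != entry.id {
            return false;
        }
        prev = entry.id;
    }
    true
}

fn main() {
    let seed = 0;
    let mut log = toy_log(seed, &[(0, None), (3, Some(vec![37])), (3, Some(vec![38]))]);
    assert!(toy_verify_slice(&log, seed));
    // Reordering two events breaks the chain, so verification fails.
    log.swap(1, 2);
    assert!(!toy_verify_slice(&log, seed));
    println!("toy log checks passed");
}
```

Because each id depends on the previous id and on the event data, swapping or altering an entry invalidates every recomputed id from that point on, which is the property the assertion relies on.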
