Merge branch 'master' into zcash_init

commit

c9132eb99d

|

|

@ -162,7 +162,7 @@ dependencies = [

|

|||

"bitcrypto 0.1.0",

|

||||

"heapsize 0.4.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"primitives 0.1.0",

|

||||

"rustc-serialize 0.3.24 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rustc-hex 2.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serialization 0.1.0",

|

||||

"serialization_derive 0.1.0",

|

||||

]

|

||||

|

|

@ -229,12 +229,19 @@ dependencies = [

|

|||

|

||||

[[package]]

|

||||

name = "csv"

|

||||

version = "0.15.0"

|

||||

version = "1.0.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"byteorder 1.2.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"memchr 1.0.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rustc-serialize 0.3.24 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"csv-core 0.1.4 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serde 1.0.21 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "csv-core"

|

||||

version = "0.1.4"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"memchr 2.0.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

|

|

@ -537,7 +544,7 @@ dependencies = [

|

|||

"lazy_static 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"primitives 0.1.0",

|

||||

"rand 0.4.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rustc-serialize 0.3.24 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rustc-hex 2.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

|

|

@ -619,6 +626,14 @@ dependencies = [

|

|||

"libc 0.2.33 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "memchr"

|

||||

version = "2.0.1"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

dependencies = [

|

||||

"libc 0.2.33 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "memoffset"

|

||||

version = "0.2.1"

|

||||

|

|

@ -720,6 +735,7 @@ dependencies = [

|

|||

"chain 0.1.0",

|

||||

"lazy_static 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"primitives 0.1.0",

|

||||

"rustc-hex 2.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serialization 0.1.0",

|

||||

]

|

||||

|

||||

|

|

@ -807,7 +823,7 @@ version = "0.1.0"

|

|||

dependencies = [

|

||||

"abstract-ns 0.3.4 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"bitcrypto 0.1.0",

|

||||

"csv 0.15.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"csv 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"futures 0.1.17 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"futures-cpupool 0.1.7 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"log 0.4.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

|

|

@ -840,7 +856,7 @@ dependencies = [

|

|||

"kernel32-sys 0.2.2 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"libc 0.2.33 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rand 0.3.22 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"smallvec 0.4.4 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"smallvec 0.4.5 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"winapi 0.2.8 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

|

|

@ -883,7 +899,7 @@ dependencies = [

|

|||

"bigint 1.0.5 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"byteorder 1.2.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"heapsize 0.4.1 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rustc-serialize 0.3.24 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rustc-hex 2.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

|

|

@ -1025,7 +1041,7 @@ dependencies = [

|

|||

"network 0.1.0",

|

||||

"p2p 0.1.0",

|

||||

"primitives 0.1.0",

|

||||

"rustc-serialize 0.3.24 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"rustc-hex 2.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"script 0.1.0",

|

||||

"serde 1.0.21 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"serde_derive 1.0.21 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

|

|

@ -1050,6 +1066,11 @@ dependencies = [

|

|||

"time 0.1.38 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "rustc-hex"

|

||||

version = "2.0.0"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

name = "rustc-serialize"

|

||||

version = "0.3.24"

|

||||

|

|

@ -1144,6 +1165,7 @@ version = "0.1.0"

|

|||

dependencies = [

|

||||

"byteorder 1.2.3 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

"primitives 0.1.0",

|

||||

"rustc-hex 2.0.0 (registry+https://github.com/rust-lang/crates.io-index)",

|

||||

]

|

||||

|

||||

[[package]]

|

||||

|

|

@ -1195,7 +1217,7 @@ source = "registry+https://github.com/rust-lang/crates.io-index"

|

|||

|

||||

[[package]]

|

||||

name = "smallvec"

|

||||

version = "0.4.4"

|

||||

version = "0.4.5"

|

||||

source = "registry+https://github.com/rust-lang/crates.io-index"

|

||||

|

||||

[[package]]

|

||||

|

|

@ -1546,7 +1568,8 @@ source = "registry+https://github.com/rust-lang/crates.io-index"

|

|||

"checksum crossbeam-deque 0.2.0 (registry+https://github.com/rust-lang/crates.io-index)" = "f739f8c5363aca78cfb059edf753d8f0d36908c348f3d8d1503f03d8b75d9cf3"

|

||||

"checksum crossbeam-epoch 0.3.1 (registry+https://github.com/rust-lang/crates.io-index)" = "927121f5407de9956180ff5e936fe3cf4324279280001cd56b669d28ee7e9150"

|

||||

"checksum crossbeam-utils 0.2.2 (registry+https://github.com/rust-lang/crates.io-index)" = "2760899e32a1d58d5abb31129f8fae5de75220bc2176e77ff7c627ae45c918d9"

|

||||

"checksum csv 0.15.0 (registry+https://github.com/rust-lang/crates.io-index)" = "7ef22b37c7a51c564a365892c012dc0271221fdcc64c69b19ba4d6fa8bd96d9c"

|

||||

"checksum csv 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)" = "71903184af9960c555e7f3b32ff17390d20ecaaf17d4f18c4a0993f2df8a49e3"

|

||||

"checksum csv-core 0.1.4 (registry+https://github.com/rust-lang/crates.io-index)" = "4dd8e6d86f7ba48b4276ef1317edc8cc36167546d8972feb4a2b5fec0b374105"

|

||||

"checksum display_derive 0.0.0 (registry+https://github.com/rust-lang/crates.io-index)" = "4bba5dcd6d2855639fcf65a9af7bbad0bfb6dbf6fe68fba70bab39a6eb973ef4"

|

||||

"checksum domain 0.2.2 (registry+https://github.com/rust-lang/crates.io-index)" = "c1850bf2c3c3349e1dba2aa214d86cf9edaa057a09ce46b1a02d5c07d5da5e65"

|

||||

"checksum dtoa 0.4.2 (registry+https://github.com/rust-lang/crates.io-index)" = "09c3753c3db574d215cba4ea76018483895d7bff25a31b49ba45db21c48e50ab"

|

||||

|

|

@ -1587,6 +1610,7 @@ source = "registry+https://github.com/rust-lang/crates.io-index"

|

|||

"checksum log 0.4.1 (registry+https://github.com/rust-lang/crates.io-index)" = "89f010e843f2b1a31dbd316b3b8d443758bc634bed37aabade59c686d644e0a2"

|

||||

"checksum lru-cache 0.1.1 (registry+https://github.com/rust-lang/crates.io-index)" = "4d06ff7ff06f729ce5f4e227876cb88d10bc59cd4ae1e09fbb2bde15c850dc21"

|

||||

"checksum memchr 1.0.2 (registry+https://github.com/rust-lang/crates.io-index)" = "148fab2e51b4f1cfc66da2a7c32981d1d3c083a803978268bb11fe4b86925e7a"

|

||||

"checksum memchr 2.0.1 (registry+https://github.com/rust-lang/crates.io-index)" = "796fba70e76612589ed2ce7f45282f5af869e0fdd7cc6199fa1aa1f1d591ba9d"

|

||||

"checksum memoffset 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)" = "0f9dc261e2b62d7a622bf416ea3c5245cdd5d9a7fcc428c0d06804dfce1775b3"

|

||||

"checksum mime 0.3.5 (registry+https://github.com/rust-lang/crates.io-index)" = "e2e00e17be181010a91dbfefb01660b17311059dc8c7f48b9017677721e732bd"

|

||||

"checksum mio 0.6.11 (registry+https://github.com/rust-lang/crates.io-index)" = "0e8411968194c7b139e9105bc4ae7db0bae232af087147e72f0616ebf5fdb9cb"

|

||||

|

|

@ -1622,6 +1646,7 @@ source = "registry+https://github.com/rust-lang/crates.io-index"

|

|||

"checksum rocksdb 0.4.5 (git+https://github.com/ethcore/rust-rocksdb)" = "<none>"

|

||||

"checksum rocksdb-sys 0.3.0 (git+https://github.com/ethcore/rust-rocksdb)" = "<none>"

|

||||

"checksum rust-crypto 0.2.36 (registry+https://github.com/rust-lang/crates.io-index)" = "f76d05d3993fd5f4af9434e8e436db163a12a9d40e1a58a726f27a01dfd12a2a"

|

||||

"checksum rustc-hex 2.0.0 (registry+https://github.com/rust-lang/crates.io-index)" = "d2b03280c2813907a030785570c577fb27d3deec8da4c18566751ade94de0ace"

|

||||

"checksum rustc-serialize 0.3.24 (registry+https://github.com/rust-lang/crates.io-index)" = "dcf128d1287d2ea9d80910b5f1120d0b8eede3fbf1abe91c40d39ea7d51e6fda"

|

||||

"checksum rustc_version 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)" = "b9743a7670d88d5d52950408ecdb7c71d8986251ab604d4689dd2ca25c9bca69"

|

||||

"checksum safemem 0.2.0 (registry+https://github.com/rust-lang/crates.io-index)" = "e27a8b19b835f7aea908818e871f5cc3a5a186550c30773be987e155e8163d8f"

|

||||

|

|

@ -1639,7 +1664,7 @@ source = "registry+https://github.com/rust-lang/crates.io-index"

|

|||

"checksum slab 0.3.0 (registry+https://github.com/rust-lang/crates.io-index)" = "17b4fcaed89ab08ef143da37bc52adbcc04d4a69014f4c1208d6b51f0c47bc23"

|

||||

"checksum slab 0.4.0 (registry+https://github.com/rust-lang/crates.io-index)" = "fdeff4cd9ecff59ec7e3744cbca73dfe5ac35c2aedb2cfba8a1c715a18912e9d"

|

||||

"checksum smallvec 0.2.1 (registry+https://github.com/rust-lang/crates.io-index)" = "4c8cbcd6df1e117c2210e13ab5109635ad68a929fcbb8964dc965b76cb5ee013"

|

||||

"checksum smallvec 0.4.4 (registry+https://github.com/rust-lang/crates.io-index)" = "ee4f357e8cd37bf8822e1b964e96fd39e2cb5a0424f8aaa284ccaccc2162411c"

|

||||

"checksum smallvec 0.4.5 (registry+https://github.com/rust-lang/crates.io-index)" = "f90c5e5fe535e48807ab94fc611d323935f39d4660c52b26b96446a7b33aef10"

|

||||

"checksum stable_deref_trait 1.0.0 (registry+https://github.com/rust-lang/crates.io-index)" = "15132e0e364248108c5e2c02e3ab539be8d6f5d52a01ca9bbf27ed657316f02b"

|

||||

"checksum strsim 0.6.0 (registry+https://github.com/rust-lang/crates.io-index)" = "b4d15c810519a91cf877e7e36e63fe068815c678181439f2f29e2562147c3694"

|

||||

"checksum syn 0.11.11 (registry+https://github.com/rust-lang/crates.io-index)" = "d3b891b9015c88c576343b9b3e41c2c11a51c219ef067b264bd9c8aa9b441dad"

|

||||

|

|

|

|||

|

|

@ -37,9 +37,6 @@ debug = true

|

|||

[profile.test]

|

||||

debug = true

|

||||

|

||||

[profile.doc]

|

||||

debug = true

|

||||

|

||||

[[bin]]

|

||||

path = "pbtc/main.rs"

|

||||

name = "pbtc"

|

||||

|

|

|

|||

|

|

@ -212,6 +212,8 @@ SUBCOMMANDS:

|

|||

|

||||

## JSON-RPC

|

||||

|

||||

The JSON-RPC interface is served on port :8332 for mainnet and :18332 for testnet unless you specified otherwise. So if you are using testnet, you will need to change the port in the sample curl requests shown below.

|

||||

|

||||

#### Network

|

||||

|

||||

The Parity-bitcoin `network` interface.

|

||||

|

|

|

|||

|

|

@ -23,6 +23,7 @@ pub fn fetch(benchmark: &mut Benchmark) {

|

|||

let next_block = test_data::block_builder()

|

||||

.transaction()

|

||||

.coinbase()

|

||||

.lock_time(x as u32)

|

||||

.output().value(5000000000).build()

|

||||

.build()

|

||||

.merkled_header().parent(rolling_hash.clone()).nonce(x as u32).build()

|

||||

|

|

@ -65,6 +66,7 @@ pub fn write(benchmark: &mut Benchmark) {

|

|||

let next_block = test_data::block_builder()

|

||||

.transaction()

|

||||

.coinbase()

|

||||

.lock_time(x as u32)

|

||||

.output().value(5000000000).build()

|

||||

.build()

|

||||

.merkled_header().parent(rolling_hash.clone()).nonce(x as u32).build()

|

||||

|

|

@ -102,6 +104,7 @@ pub fn reorg_short(benchmark: &mut Benchmark) {

|

|||

let next_block = test_data::block_builder()

|

||||

.transaction()

|

||||

.coinbase()

|

||||

.lock_time(x as u32)

|

||||

.output().value(5000000000).build()

|

||||

.build()

|

||||

.merkled_header().parent(rolling_hash.clone()).nonce(x as u32 * 4).build()

|

||||

|

|

@ -112,6 +115,7 @@ pub fn reorg_short(benchmark: &mut Benchmark) {

|

|||

let next_block_side = test_data::block_builder()

|

||||

.transaction()

|

||||

.coinbase()

|

||||

.lock_time(x as u32)

|

||||

.output().value(5000000000).build()

|

||||

.build()

|

||||

.merkled_header().parent(base).nonce(x as u32 * 4 + 2).build()

|

||||

|

|

@ -122,6 +126,7 @@ pub fn reorg_short(benchmark: &mut Benchmark) {

|

|||

let next_block_side_continue = test_data::block_builder()

|

||||

.transaction()

|

||||

.coinbase()

|

||||

.lock_time(x as u32)

|

||||

.output().value(5000000000).build()

|

||||

.build()

|

||||

.merkled_header().parent(next_base).nonce(x as u32 * 4 + 3).build()

|

||||

|

|

@ -131,6 +136,7 @@ pub fn reorg_short(benchmark: &mut Benchmark) {

|

|||

let next_block_continue = test_data::block_builder()

|

||||

.transaction()

|

||||

.coinbase()

|

||||

.lock_time(x as u32)

|

||||

.output().value(5000000000).build()

|

||||

.build()

|

||||

.merkled_header().parent(rolling_hash.clone()).nonce(x as u32 * 4 + 1).build()

|

||||

|

|

@ -199,6 +205,7 @@ pub fn write_heavy(benchmark: &mut Benchmark) {

|

|||

let next_block = test_data::block_builder()

|

||||

.transaction()

|

||||

.coinbase()

|

||||

.lock_time(x as u32)

|

||||

.output().value(5000000000).build()

|

||||

.build()

|

||||

.merkled_header().parent(rolling_hash.clone()).nonce(x as u32).build()

|

||||

|

|

|

|||

|

|

@ -34,6 +34,7 @@ pub fn main(benchmark: &mut Benchmark) {

|

|||

LittleEndian::write_u64(&mut coinbase_nonce[..], x as u64);

|

||||

let next_block = test_data::block_builder()

|

||||

.transaction()

|

||||

.lock_time(x as u32)

|

||||

.input()

|

||||

.coinbase()

|

||||

.signature_bytes(coinbase_nonce.to_vec().into())

|

||||

|

|

@ -62,6 +63,7 @@ pub fn main(benchmark: &mut Benchmark) {

|

|||

LittleEndian::write_u64(&mut coinbase_nonce[..], (b + BLOCKS_INITIAL) as u64);

|

||||

let mut builder = test_data::block_builder()

|

||||

.transaction()

|

||||

.lock_time(b as u32)

|

||||

.input().coinbase().signature_bytes(coinbase_nonce.to_vec().into()).build()

|

||||

.output().value(5000000000).build()

|

||||

.build();

|

||||

|

|

|

|||

|

|

@ -4,7 +4,7 @@ version = "0.1.0"

|

|||

authors = ["debris <marek.kotewicz@gmail.com>"]

|

||||

|

||||

[dependencies]

|

||||

rustc-serialize = "0.3"

|

||||

rustc-hex = "2"

|

||||

heapsize = "0.4"

|

||||

bitcrypto = { path = "../crypto" }

|

||||

primitives = { path = "../primitives" }

|

||||

|

|

|

|||

|

|

@ -0,0 +1,286 @@

|

|||

# Chain

|

||||

|

||||

In this crate, you will find the structures and functions that make up the blockchain, Bitcoin's core data structure.

|

||||

|

||||

## Conceptual Overview

|

||||

Here we will dive deep into how the blockchain is created, organized, etc. as a preface for understanding the code in this crate.

|

||||

|

||||

We will cover the following concepts:

|

||||

* Blockchain

|

||||

* Block

|

||||

* Block Header

|

||||

* Merkle Tree

|

||||

* Transaction

|

||||

* Witnesses and SegWit

|

||||

* Coinbase

|

||||

|

||||

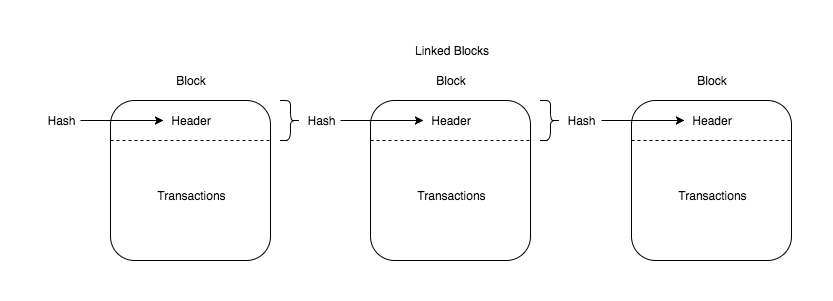

### Blockchain

|

||||

So what is a blockchain? A blockchain is a *chain* of *blocks*...

|

||||

|

||||

|

||||

|

||||

Yep, actually.

|

||||

|

||||

### Block

|

||||

The real question is, what is a [block](https://github.com/bitcoinbook/bitcoinbook/blob/develop/ch09.asciidoc#structure-of-a-block)?

|

||||

|

||||

A block is a data structure with two fields:

|

||||

* **Block header:** a data structure containing the block's metadata

|

||||

* **Transactions:** an array ([vector](https://doc.rust-lang.org/book/second-edition/ch08-01-vectors.html) in rust) of transactions

|

||||

|

||||

|

||||

|

||||

### Block Header

|

||||

So what is a [block header](https://github.com/bitcoinbook/bitcoinbook/blob/develop/ch09.asciidoc#block-header)?

|

||||

|

||||

A block header is a data structure with the following fields:

|

||||

* **Version:** indicates which set of block validation rules to follow

|

||||

* **Previous Header Hash:** a reference to the parent/previous block in the blockchain

|

||||

* **Merkle Root Hash:** a hash (root hash) of the merkle tree data structure containing a block's transactions

|

||||

* **Time:** a timestamp (seconds from Unix Epoch)

|

||||

* **Bits:** the difficulty target for this block, stored in a compact encoding

|

||||

* **Nonce:** value used in proof-of-work

|

||||

|

||||

|

||||

|

||||

*How are blocks chained together?* They are chained together via the backwards reference (previous header hash) present in the block header. Each block points backwards to its parent, all the way back to the [genesis block](https://github.com/bitcoinbook/bitcoinbook/blob/develop/ch09.asciidoc#the-genesis-block) (the first block in the Bitcoin blockchain that is hard coded into all clients).
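To make the backwards reference concrete, here is a minimal sketch of a chain of headers. Everything in it is an assumption made for illustration: `ToyHeader`, `build_chain` and `is_linked` are hypothetical names, and the standard library's `DefaultHasher` stands in for Bitcoin's double SHA-256 (it is not cryptographic and is not what this crate uses).

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Hypothetical, simplified header: only the fields needed to show the linkage.
#[derive(Hash)]
pub struct ToyHeader {
    pub previous_header_hash: u64,
    pub nonce: u32,
}

impl ToyHeader {
    /// Toy stand-in for the real header hash (NOT cryptographic).
    pub fn header_hash(&self) -> u64 {
        let mut hasher = DefaultHasher::new();
        self.hash(&mut hasher);
        hasher.finish()
    }
}

/// Builds `length` headers, each one pointing back at the hash of its parent.
pub fn build_chain(length: usize) -> Vec<ToyHeader> {
    let mut chain: Vec<ToyHeader> = Vec::with_capacity(length);
    for height in 0..length {
        // The genesis header has no parent, so this sketch points it at 0.
        let previous_header_hash = chain.last().map_or(0, |parent| parent.header_hash());
        chain.push(ToyHeader { previous_header_hash, nonce: height as u32 });
    }
    chain
}

/// Checks that every header references the hash of the header before it.
pub fn is_linked(chain: &[ToyHeader]) -> bool {
    chain
        .windows(2)
        .all(|pair| pair[1].previous_header_hash == pair[0].header_hash())
}
```

Changing any field of an earlier header changes its hash, so the reference stored in its child no longer matches. That is the property that makes the chain tamper-evident.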

|

||||

|

||||

### Merkle Root

|

||||

*What is a Merkle Root?* A merkle root is the root of a merkle tree. As best stated in *Mastering Bitcoin*:

|

||||

|

||||

> A _merkle tree_, also known as a _binary hash tree_, is a data

|

||||

> structure used for efficiently summarizing and verifying the integrity of large sets of data.

|

||||

|

||||

In a merkle tree, all the data, in this case transactions, are leaves in the tree. Each leaf is hashed, each pair of sibling hashes is concatenated and hashed again, and so on all the way up the tree until you are left with a single *root* hash (the merkle root hash).

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

### Transaction

|

||||

According to [Mastering Bitcoin](https://github.com/bitcoinbook/bitcoinbook/):

|

||||

|

||||

> Transactions are the most important part of the bitcoin system. Everything else in bitcoin is designed to ensure that transactions can be created, propagated on the network, validated, and finally added to the global ledger of transactions (the blockchain).

|

||||

|

||||

At its most basic level, a transaction is an encoded data structure that facilitates the transfer of value between two public key addresses on the Bitcoin blockchain.

|

||||

|

||||

The most fundamental building block of a transaction is a `transaction output` -- the bitcoin you own in your "wallet" is in fact a subset of `unspent transaction outputs` or `UTXOs` of the global `UTXO set`. `UTXOs` are indivisible, discrete units of value which can only be consumed in their entirety. Thus, if I want to send you 1 BTC and I only own one `UTXO` worth 2 BTC, I would construct a transaction that spends my `UTXO` and sends 1 BTC to you and 1 BTC back to me (just like receiving change).

|

||||

|

||||

**Transaction Output:** transaction outputs have two fields:

|

||||

* *value*: the amount of bitcoin (in satoshis) that the output carries

|

||||

* *scriptPubKey (aka locking script or witness script)*: conditions required to unlock (spend) a transaction value

|

||||

|

||||

**Transaction Input:** transaction inputs have four fields:

|

||||

* *previous output*: the previous output transaction reference, as an OutPoint structure (see below)

|

||||

* *scriptSig*: a script satisfying the conditions set on the UTXO ([BIP16](https://github.com/bitcoin/bips/blob/master/bip-0016.mediawiki))

|

||||

* *scriptWitness*: a script satisfying the conditions set on the UTXO ([BIP141](https://github.com/bitcoin/bips/blob/master/bip-0141.mediawiki))

|

||||

* *sequence number*: transaction version as defined by the sender. Intended for "replacement" of transactions when information is updated before inclusion into a block.

|

||||

|

||||

**Outpoint**:

|

||||

* *hash*: references the transaction that contains the UTXO being spent

|

||||

* *index*: identifies which UTXO from that transaction is referenced

|

||||

|

||||

**Transaction Version:** the version of the data formatting

|

||||

|

||||

**Transaction Locktime:** this specifies the earliest block height or Unix time at which this transaction becomes valid
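How a single number can mean either a block height or a timestamp is easier to see in code. The sketch below assumes the usual Bitcoin convention: 0 means "not locked", values below 500,000,000 are block heights, and anything at or above that is a Unix time. The names `LockTime` and `interpret_lock_time` are hypothetical and are not taken from this crate.

```rust
/// Values below this threshold are block heights; values at or above it are Unix times.
pub const LOCKTIME_THRESHOLD: u32 = 500_000_000;

/// Hypothetical helper type describing what a raw lock time value means.
#[derive(Debug, PartialEq)]
pub enum LockTime {
    /// A lock time of 0: the transaction is valid immediately.
    Unlocked,
    /// Valid once the chain reaches this block height.
    BlockHeight(u32),
    /// Valid once this Unix time (in seconds) has passed.
    UnixTime(u32),
}

/// Classifies a raw lock time value.
pub fn interpret_lock_time(lock_time: u32) -> LockTime {
    match lock_time {
        0 => LockTime::Unlocked,
        n if n < LOCKTIME_THRESHOLD => LockTime::BlockHeight(n),
        n => LockTime::UnixTime(n),
    }
}
```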

|

||||

|

||||

**Transaction Fee:** The total value of a transaction's inputs must be greater than or equal to the total value of its outputs, or else the transaction is invalid. The difference between these two values is the transaction fee, a fee paid to the miner who includes this transaction in his/her block.
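As a rough sketch of the fee rule, the function below sums the input and output values (in satoshis) and returns the difference. `transaction_fee` and `checked_sum` are hypothetical helpers written for this README, not functions from this crate, and they work on plain slices of values rather than on real transaction structs.

```rust
/// Amounts are expressed in satoshis (1 BTC = 100,000,000 satoshis).
pub const SATOSHIS_PER_BTC: u64 = 100_000_000;

/// Sums the values, returning `None` if the sum overflows.
fn checked_sum(values: &[u64]) -> Option<u64> {
    values.iter().try_fold(0u64, |acc, &value| acc.checked_add(value))
}

/// Returns the fee, or `None` if the outputs spend more than the inputs
/// provide (an invalid transaction) or if a sum overflows.
pub fn transaction_fee(input_values: &[u64], output_values: &[u64]) -> Option<u64> {
    let total_in = checked_sum(input_values)?;
    let total_out = checked_sum(output_values)?;
    total_in.checked_sub(total_out)
}
```

For example, spending one 2 BTC `UTXO` to create a 1 BTC payment and a 0.9999 BTC change output leaves 10,000 satoshis as the fee.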

|

||||

|

||||

### Witnesses and SegWit

|

||||

|

||||

**Preface**: here I will try to give the minimal context surrounding segwit as is necessary to understand why witnesses exist in terms of blocks, block headers, and transactions.

|

||||

|

||||

SegWit is defined in [BIP141](https://github.com/bitcoin/bips/blob/master/bip-0141.mediawiki).

|

||||

|

||||

A witness is defined as:

|

||||

> The witness is a serialization of all witness data of the transaction.

|

||||

|

||||

Most importantly:

|

||||

> Witness data is NOT script.

|

||||

|

||||

Thus:

|

||||

> A non-witness program (defined hereinafter) txin MUST be associated with an empty witness field, represented by a 0x00. If all txins are not witness program, a transaction's wtxid is equal to its txid.

|

||||

|

||||

*Regular Transaction Id vs. Witness Transaction Id*

|

||||

|

||||

* Regular transaction id:

|

||||

```[nVersion][txins][txouts][nLockTime]```

|

||||

* Witness transaction id:

|

||||

```[nVersion][marker][flag][txins][txouts][witness][nLockTime]```

|

||||

|

||||

A `witness root hash` is calculated with all those `wtxid` as leaves, in a way similar to the `hashMerkleRoot` in the block header.

|

||||

|

||||

In the transaction, there are two different script fields:

|

||||

* **script_sig**: original/old signature script ([BIP16](https://github.com/bitcoin/bips/blob/master/bip-0016.mediawiki)/P2SH)

|

||||

* **script_witness**: witness script

|

||||

|

||||

Depending on the content of these two fields and the scriptPubKey, witness validation logic may be triggered. Here are the two cases (note these definitions are straight from the BIP so may be quite dense):

|

||||

|

||||

1. **Native witness program**: *a scriptPubKey that is exactly a push of a version byte, plus a push of a witness program. The scriptSig must be exactly empty or validation fails.*

|

||||

|

||||

2. **P2SH witness program**: *a scriptPubKey is a P2SH script, and the BIP16 redeemScript pushed in the scriptSig is exactly a push of a version byte plus a push of a witness program. The scriptSig must be exactly a push of the BIP16 redeemScript or validation fails.*
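The "push of a version byte, plus a push of a witness program" wording is dense, so here is a minimal sketch of the native case. It assumes the byte-level rule from BIP141: a 1-byte version opcode (`OP_0`, or `OP_1` through `OP_16`) followed by a single direct push of 2 to 40 bytes. The function names are hypothetical and this is not the validation code used by this repository.

```rust
/// If `script` is a witness program, returns `(version, program)`.
pub fn parse_witness_program(script: &[u8]) -> Option<(u8, &[u8])> {
    // 1 version opcode + 1 push-length byte + 2..=40 program bytes.
    if script.len() < 4 || script.len() > 42 {
        return None;
    }
    let version = match script[0] {
        0x00 => 0,                     // OP_0
        op @ 0x51..=0x60 => op - 0x50, // OP_1 through OP_16
        _ => return None,
    };
    // The second byte must be a direct push covering the rest of the script.
    let push_len = script[1] as usize;
    if push_len + 2 != script.len() {
        return None;
    }
    Some((version, &script[2..]))
}

/// Native case: the scriptPubKey is a witness program and the scriptSig is empty.
pub fn is_native_witness_spend(script_pubkey: &[u8], script_sig: &[u8]) -> bool {
    parse_witness_program(script_pubkey).is_some() && script_sig.is_empty()
}
```

The P2SH case applies the same check to the redeemScript pushed in the scriptSig instead of to the scriptPubKey.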

|

||||

|

||||

[Here](https://github.com/bitcoin/bips/blob/master/bip-0141.mediawiki#witness-program) are the nitty gritty details of how witnesses and scripts work together -- this goes into the fine details of how the above situations are implemented.

|

||||

|

||||

Here are a couple of Bitcoin Stack Exchange questions and answers that help clarify some of the above information:

|

||||

|

||||

* [What's the purpose of ScriptSig in a SegWit transaction?](https://bitcoin.stackexchange.com/questions/49372/whats-the-purpose-of-scriptsig-in-a-segwit-transaction)

|

||||

* [Can old wallets redeem segwit outputs it receives? If so how?](https://bitcoin.stackexchange.com/questions/50254/can-old-wallets-redeem-segwit-outputs-it-receives-if-so-how?rq=1)

|

||||

|

||||

|

||||

### Coinbase

|

||||

Whenever a miner mines a block, it includes a special transaction called a coinbase transaction. This transaction has a single input that does not spend any previous output, and it creates new bitcoins equal to the current block reward (at this time 12.5 BTC) which are awarded to the miner of the block. Read more about the coinbase transaction [here](https://github.com/bitcoinbook/bitcoinbook/blob/f8b883dcd4e3d1b9adf40fed59b7e898fbd9241f/ch10.asciidoc#the-coinbase-transaction).

|

||||

|

||||

**Need a more visual demonstration of the above information? Check out [this awesome website](https://anders.com/blockchain/).**

|

||||

|

||||

## Crate Dependencies

|

||||

#### 1. [rustc-hex](https://crates.io/crates/rustc-hex):

|

||||

*Serialization and deserialization support from hexadecimal strings.*

|

||||

|

||||

**One thing to note**: *This crate is deprecated in favor of [`serde`](https://serde.rs/). No new feature development will happen in this crate, although bug fixes proposed through PRs will still be merged. It is very highly recommended by the Rust Library Team that you use [`serde`](https://serde.rs/), not this crate.*

|

||||

|

||||

#### 2. [heapsize](https://crates.io/crates/heapsize):

|

||||

*infrastructure for measuring the total runtime size of an object on the heap*

|

||||

|

||||

#### 3. Crates from within the Parity Bitcoin Repo:

|

||||

* bitcrypto (crypto)

|

||||

* primitives

|

||||

* serialization

|

||||

* serialization_derive

|

||||

|

||||

## Crate Content

|

||||

|

||||

### Block (block.rs)

|

||||

A relatively straightforward implementation of the data structure described above. A `block` is a Rust `struct`. It implements the following traits:

|

||||

* ```From<&'static str>```: this trait takes in a string and outputs a `block`. It is implemented via the `from` function which deserializes the received string into a `block` data structure. Read more about serialization [here](https://github.com/bitcoinbook/bitcoinbook/blob/develop/ch06.asciidoc#transaction-serializationoutputs) (in the context of transactions).

|

||||

* ```RepresentH256```: this trait takes a `block` data structure and hashes it, returning the hash.

|

||||

|

||||

The `block` has a few methods of its own. The entirety of these are simple getter methods.
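To show what a `From<&'static str>` implementation can look like, here is a small sketch that turns a hex string into a struct. It is only an illustration under stated assumptions: `MiniHeader` and `decode_hex` are hypothetical, the struct has just two fields, and the real `block` goes through the serialization crate rather than decoding bytes by hand.

```rust
/// Hypothetical two-field struct standing in for a real `block`.
#[derive(Debug, PartialEq)]
pub struct MiniHeader {
    pub version: u32,
    pub nonce: u32,
}

/// Decodes an ASCII hex string into bytes, panicking on malformed input.
fn decode_hex(s: &str) -> Vec<u8> {
    assert!(s.len() % 2 == 0, "hex string must have an even length");
    (0..s.len())
        .step_by(2)
        .map(|i| u8::from_str_radix(&s[i..i + 2], 16).expect("invalid hex digit"))
        .collect()
}

impl From<&'static str> for MiniHeader {
    fn from(s: &'static str) -> Self {
        let bytes = decode_hex(s);
        assert_eq!(bytes.len(), 8, "expected exactly 8 bytes");
        // Bitcoin serializes integers in little-endian byte order.
        let version = u32::from_le_bytes([bytes[0], bytes[1], bytes[2], bytes[3]]);
        let nonce = u32::from_le_bytes([bytes[4], bytes[5], bytes[6], bytes[7]]);
        MiniHeader { version, nonce }
    }
}
```

With this in place, `let header: MiniHeader = "010000002a000000".into();` builds the struct straight from a string literal, which is mostly handy in tests.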

|

||||

|

||||

### Block Header (block_header.rs)

|

||||

A relatively straightforward implementation of the data structure described above. A `block header` is a Rust `struct`. It implements the following traits:

|

||||

* ```From<&'static str>```: this trait takes in a string and outputs a `block header`. It is implemented via the `from` function which deserializes the received string into a `block header` data structure. Read more about serialization [here](https://github.com/bitcoinbook/bitcoinbook/blob/develop/ch06.asciidoc#transaction-serializationoutputs) (in the context of transactions).

|

||||

* `fmt::Debug`: this trait formats the `block header` struct for pretty printing the debug context -- i.e., it allows the programmer to print out the contents of the struct in a way that makes it easier to debug. Once this trait is implemented, you can do:

|

||||

```rust

|

||||

println!("{:?}", some_block_header);

|

||||

```

|

||||

Which will print out:

|

||||

```

|

||||

Block Header {

|

||||

version: VERSION_VALUE,

|

||||

previous_header_hash: PREVIOUS_HASH_HEADER_VALUE,

|

||||

merkle_root_hash: MERKLE_ROOT_HASH_VALUE,

|

||||

time: TIME_VALUE,

|

||||

bits: BITS_VALUE,

|

||||

nonce: NONCE_VALUE,

|

||||

}

|

||||

```
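If you have not written a manual `fmt::Debug` implementation before, the sketch below shows the general shape using the standard library's `debug_struct` helper. `ToyBlockHeader` is a hypothetical cut-down struct, and printing `bits` as hex is my own choice for the example, not necessarily what this crate does.

```rust
use std::fmt;

/// Hypothetical, cut-down header used only to demonstrate `fmt::Debug`.
pub struct ToyBlockHeader {
    pub version: u32,
    pub time: u32,
    pub bits: u32,
    pub nonce: u32,
}

impl fmt::Debug for ToyBlockHeader {
    fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {
        f.debug_struct("ToyBlockHeader")
            .field("version", &self.version)
            .field("time", &self.time)
            // Show the compact target as hex instead of a decimal number.
            .field("bits", &format_args!("{:#010x}", self.bits))
            .field("nonce", &self.nonce)
            .finish()
    }
}
```

Writing the implementation by hand, instead of using `#[derive(Debug)]`, is what lets you control how individual fields are rendered.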

|

||||

The `block header` only has a single method of its own, the `hash` method that returns a hash of itself.

|

||||

|

||||

### Constants (constants.rs)

|

||||

There are a few constants included in this crate. Since these are nicely documented, documenting them here would be redundant. [Here](https://doc.rust-lang.org/rust-by-example/custom_types/constants.html) you can read more about constants in rust.

|

||||

|

||||

### Read and Hash (read_and_hash.rs)

|

||||

This is a small file that deals with the reading and hashing of serialized data, utilizing a few nifty rust features.

|

||||

|

||||

First, a `HashedData` struct is defined over a generic T. Generics in rust work in a similar way to generics in other languages. If you need to brush up on generics, [read here](https://doc.rust-lang.org/1.8.0/book/generics.html). This data structure stores the data for a hashed value along with the size (length of the hash in bytes) and the original hash.

|

||||

|

||||

Next the `ReadAndHash` trait is defined. Traits in rust define abstract behaviors that can be shared between many different types. For example, let's say I am writing some code about food. To do this, I might want to create an `Eatable` trait that has a method `eat` describing how to eat this food (borrowing an example from the [New Rustacean podcast](https://newrustacean.com/)). To do this, I would define the trait as follows:

|

||||

```rust

|

||||

pub trait Eatable {

|

||||

fn eat(&self) -> String;

|

||||

}

|

||||

```

|

||||

Here I have defined a trait along with a method signature that must be implemented by any type that implements this trait. For example, let's say I define a candy type that is eatable:

|

||||

```rust
struct Candy {
    flavor: String,
}

impl Eatable for Candy {
    fn eat(&self) -> String {
        format!("Unwrap candy and munch on that {} goodness.", &self.flavor)
    }
}

fn main() {
    // Create candy and eat it
    let candy = Candy { flavor: "chocolate".to_string() };
    println!("{}", candy.eat()); // "Unwrap candy and munch on that chocolate goodness."
}
```

|

||||

|

||||

Now let's take this one step further. Let's say we want to recreate `Eatable` so that the `eat` function returns a `Compost` type with generic T, where T is some type that is `Compostable` (another trait). Here it is important that we only return `Compostable` types, because only `Compostable` foods can be made into compost. Thus, we can recreate the `Eatable` trait, this time limiting `eat` to types that also implement the `Compostable` trait using the `where` keyword (note this kind of restriction is called a trait bound):

```rust
pub trait Eatable {
    // Eating consumes the food; `eat` is only available for `Compostable` types
    fn eat(self) -> Compost<Self> where Self: Compostable + Sized;
}

pub trait Compostable {} // Here Compostable is a marker trait

pub struct Compost<T> {
    compostable_food: T,
}

impl<T> Compost<T> {
    pub fn celebrate(&self) {
        println!("Thank you for saving the earth!");
    }
}
```

So, let's now redefine `Candy`:

```rust
struct Candy {
    flavor: String,
}

impl Compostable for Candy {}

impl Eatable for Candy {
    fn eat(self) -> Compost<Self> {
        // The eaten candy itself becomes the compostable food
        Compost { compostable_food: self }
    }
}

fn main() {
    // Create candy and eat it
    let candy = Candy { flavor: "chocolate".to_string() };
    let compost = candy.eat();
    compost.celebrate(); // "Thank you for saving the earth!"
}
```

|

||||

|

||||

If this example doesn't quite make sense, I recommend checking out the [traits chapter](https://doc.rust-lang.org/book/second-edition/ch10-02-traits.html) in the Rust Book.

|

||||

|

||||

Now that you understand traits, generics, and trait bounds, let's get back to `ReadAndHash`. This trait declares a `read_and_hash<T>` method where `T` is `Deserializable`, meaning it can be deserialized (which, as you might guess, is important since the input here is a serialized string). The method returns a `Result` (if you are unfamiliar with results in Rust, [read more here](https://doc.rust-lang.org/std/result/)) wrapping the `HashedData` type described above.

|

||||

|

||||

Finally, the `ReadAndHash` trait is implemented for the `Reader` type. You can read more about the `Reader` type in the serialization crate.
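To make the shape of this trait concrete, here is a minimal, self-contained sketch. It is not the crate's code: the `Deserializable` trait, the slice-backed `Reader`, the `String` error type, the `HashedData` fields, and the 64-bit `DefaultHasher` hash are all simplified stand-ins chosen for illustration.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::Hasher;

// Stand-in for the serialization crate's trait (hypothetical, simplified):
// returns the parsed value and the number of bytes consumed.
pub trait Deserializable: Sized {
    fn deserialize(bytes: &[u8]) -> Result<(Self, usize), String>;
}

// Assumed layout: the parsed data plus the size and hash of its bytes.
pub struct HashedData<T> {
    pub size: usize,
    pub hash: u64, // toy 64-bit hash, not a real 256-bit hash
    pub data: T,
}

pub trait ReadAndHash {
    fn read_and_hash<T>(&mut self) -> Result<HashedData<T>, String>
    where
        T: Deserializable;
}

// Toy reader over a byte slice (the real Reader wraps an io::Read).
pub struct Reader<'a> {
    buffer: &'a [u8],
}

impl<'a> Reader<'a> {
    pub fn new(buffer: &'a [u8]) -> Self {
        Reader { buffer }
    }
}

impl<'a> ReadAndHash for Reader<'a> {
    fn read_and_hash<T>(&mut self) -> Result<HashedData<T>, String>
    where
        T: Deserializable,
    {
        let buffer = self.buffer;
        let (data, size) = T::deserialize(buffer)?;
        // Hash exactly the bytes that were consumed, then advance.
        let mut hasher = DefaultHasher::new();
        hasher.write(&buffer[..size]);
        self.buffer = &buffer[size..];
        Ok(HashedData { size, hash: hasher.finish(), data })
    }
}

// Example Deserializable type: a little-endian u32.
impl Deserializable for u32 {
    fn deserialize(bytes: &[u8]) -> Result<(Self, usize), String> {
        if bytes.len() < 4 {
            return Err("unexpected end of input".to_string());
        }
        let value = u32::from_le_bytes([bytes[0], bytes[1], bytes[2], bytes[3]]);
        Ok((value, 4))
    }
}
```

The trait bound on `T` is what lets the generic method call `T::deserialize` without knowing the concrete type in advance.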

|

||||

|

||||

### Transaction (transaction.rs)

|

||||

As described above, there are four structs related to transactions defined in this file:

|

||||

* OutPoint

|

||||

* TransactionInput

|

||||

* TransactionOutput

|

||||

* Transaction

|

||||

|

||||

The implementations of these are pretty straightforward: most of the defined methods are getters, and each of these structs implements the `Serializable` and `Deserializable` traits.

|

||||

|

||||

A few things to note:

|

||||

* The `HeapSizeOf` trait is implemented for `TransactionInput`, `TransactionOutput`, and `Transaction`. It has the method `heap_size_of_children` which calculates and returns the heap sizes of various struct fields.

|

||||

* The `total_spends` method on `Transaction` calculates the sum of all the outputs in a transaction.
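The two notes above can be sketched as follows. This is a simplified illustration with stand-in structs, not the crate's definitions: the field names, the saturating overflow handling, and the capacity-based heap estimate are assumptions (the `heapsize` crate measures allocations differently).

```rust
use std::mem::size_of;

// Simplified stand-in for a transaction output.
pub struct TransactionOutput {
    pub value: u64,
    pub script_pubkey: Vec<u8>,
}

impl TransactionOutput {
    // Rough estimate: the heap buffer owned by the script bytes.
    pub fn heap_size_of_children(&self) -> usize {
        self.script_pubkey.capacity()
    }
}

// Simplified stand-in for a transaction (inputs omitted).
pub struct Transaction {
    pub outputs: Vec<TransactionOutput>,
}

impl Transaction {
    // Sum of all output values; saturates instead of overflowing (assumption).
    pub fn total_spends(&self) -> u64 {
        self.outputs
            .iter()
            .fold(0u64, |sum, output| sum.saturating_add(output.value))
    }

    // Rough estimate: the outputs buffer plus each output's own heap data.
    pub fn heap_size_of_children(&self) -> usize {
        let buffer = self.outputs.capacity() * size_of::<TransactionOutput>();
        let scripts: usize = self
            .outputs
            .iter()
            .map(TransactionOutput::heap_size_of_children)
            .sum();
        buffer + scripts
    }
}
```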

|

||||

|

||||

|

||||

### Merkle Root (merkle_root.rs)

|

||||

The main function in this file calculates the merkle root (a field on the block header struct). It relies on two helper functions:

|

||||

* **concat**: takes two hash values and returns their concatenation (512-bit)

|

||||

* **merkle_root_hash**: hashes the 512-bit concatenation of two values

|

||||

|

||||

Using these two functions, the merkle root function takes a vector of values and calculates the merkle root row by row (a row being one level of the binary tree). Note that if a row contains an odd number of values, the last value is duplicated to create a full tree.
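The row-by-row procedure can be sketched as below. This is an illustration only: a 64-bit `DefaultHasher` stands in for the real 256-bit double-SHA256 hash, so `concat` yields 16 bytes rather than 512 bits, and returning `0` for an empty input is an assumption.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::Hasher;

// Toy 64-bit hash standing in for a 256-bit hash.
pub type Hash = u64;

pub fn toy_hash(bytes: &[u8]) -> Hash {
    let mut hasher = DefaultHasher::new();
    hasher.write(bytes);
    hasher.finish()
}

// Concatenate two hashes into one double-width buffer.
pub fn concat(a: Hash, b: Hash) -> [u8; 16] {
    let mut out = [0u8; 16];
    out[..8].copy_from_slice(&a.to_le_bytes());
    out[8..].copy_from_slice(&b.to_le_bytes());
    out
}

// Hash the concatenation of a left and a right node.
pub fn merkle_root_hash(left: Hash, right: Hash) -> Hash {
    toy_hash(&concat(left, right))
}

// Reduce the leaves one row at a time until a single hash remains.
pub fn merkle_root(hashes: &[Hash]) -> Hash {
    if hashes.is_empty() {
        return 0; // assumption: empty input maps to a zero hash
    }
    let mut row = hashes.to_vec();
    while row.len() > 1 {
        // Duplicate the last value when the row has an odd length.
        if row.len() % 2 == 1 {
            let last = row[row.len() - 1];
            row.push(last);
        }
        row = row
            .chunks(2)
            .map(|pair| merkle_root_hash(pair[0], pair[1]))
            .collect();
    }
    row[0]
}
```

Because of the duplication rule, a three-leaf input produces the same root as the same input with its last leaf repeated.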

|

||||

|

||||

### Indexed

|

||||

There are indexed equivalents of `block`, `block header`, and `transaction`:

|

||||

* indexed_block.rs

|

||||

* indexed_header.rs

|

||||

* indexed_transaction.rs

|

||||

|

||||

These are essentially wrappers around the "raw" data structures with the following:

|

||||

* methods to convert to and from the raw data structures (e.g. block <-> indexed_block)

|

||||

* an equality comparison against other indexed structures (via the `PartialEq` trait)

|

||||

* a deserialize method
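A minimal sketch of such a wrapper is shown below, assuming the indexed structure caches a hash of the raw data and compares by that hash. The struct fields, the 64-bit `DefaultHasher` hash, and the hash-only equality are assumptions for illustration, and the deserialize method is omitted.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

// Simplified stand-in for the "raw" transaction.
#[derive(Debug, Clone, Hash)]
pub struct Transaction {
    pub version: i32,
    pub lock_time: u32,
}

// Wrapper that stores the raw data together with its precomputed hash.
#[derive(Debug, Clone)]
pub struct IndexedTransaction {
    pub hash: u64, // toy 64-bit hash, not a real 256-bit hash
    pub raw: Transaction,
}

// raw -> indexed: compute the hash once, up front.
impl From<Transaction> for IndexedTransaction {
    fn from(raw: Transaction) -> Self {
        let mut hasher = DefaultHasher::new();
        raw.hash(&mut hasher);
        IndexedTransaction { hash: hasher.finish(), raw }
    }
}

// indexed -> raw: drop the cached hash.
impl From<IndexedTransaction> for Transaction {
    fn from(indexed: IndexedTransaction) -> Self {
        indexed.raw
    }
}

// Equality only needs to compare the cached hashes (assumption).
impl PartialEq for IndexedTransaction {
    fn eq(&self, other: &Self) -> bool {
        self.hash == other.hash
    }
}
```

Caching the hash means repeated comparisons and lookups do not need to re-serialize and re-hash the underlying data.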

|

||||

|

|

@ -13,7 +13,7 @@ pub struct Block {

|

|||

|

||||

impl From<&'static str> for Block {

|

||||

fn from(s: &'static str) -> Self {

|

||||

deserialize(&s.from_hex().unwrap() as &[u8]).unwrap()

|

||||

deserialize(&s.from_hex::<Vec<u8>>().unwrap() as &[u8]).unwrap()

|

||||

}

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|

@ -1,6 +1,6 @@

|

|||

use std::io;

|

||||

use std::fmt;

|

||||

use hex::FromHex;

|

||||

use hex::{ToHex, FromHex};

|

||||

use ser::{deserialize, serialize};

|

||||

use crypto::dhash256;

|

||||

use compact::Compact;

|

||||

|

|

@ -66,8 +66,6 @@ impl BlockHeader {

|

|||

|

||||

impl fmt::Debug for BlockHeader {

|

||||

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

|

||||

use rustc_serialize::hex::ToHex;

|

||||

|

||||

f.debug_struct("BlockHeader")

|

||||

.field("version", &self.version)

|

||||

.field("previous_header_hash", &self.previous_header_hash.reversed())

|

||||

|

|

@ -76,14 +74,14 @@ impl fmt::Debug for BlockHeader {

|

|||

.field("time", &self.time)

|

||||

.field("bits", &self.bits)

|

||||

.field("nonce", &self.nonce)

|

||||

.field("equihash_solution", &self.equihash_solution.as_ref().map(|s| s.0.to_hex()))

|

||||

.field("equihash_solution", &self.equihash_solution.as_ref().map(|s| s.0.to_hex::<String>()))

|

||||

.finish()

|

||||

}

|

||||

}

|

||||

|

||||

impl From<&'static str> for BlockHeader {

|

||||

fn from(s: &'static str) -> Self {

|

||||

deserialize(&s.from_hex().unwrap() as &[u8]).unwrap()

|

||||

deserialize(&s.from_hex::<Vec<u8>>().unwrap() as &[u8]).unwrap()

|

||||

}

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|

@ -84,7 +84,7 @@ impl IndexedBlock {

|

|||

|

||||

impl From<&'static str> for IndexedBlock {

|

||||

fn from(s: &'static str) -> Self {

|

||||

deserialize(&s.from_hex().unwrap() as &[u8]).unwrap()

|

||||

deserialize(&s.from_hex::<Vec<u8>>().unwrap() as &[u8]).unwrap()

|

||||

}

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|

@ -1,5 +1,5 @@

|

|||

use std::io;

|

||||

use rustc_serialize::hex::ToHex;

|

||||

use hex::{ToHex, FromHex};

|

||||

use hash::{H256, H512};

|

||||

use ser::{Error, Serializable, Deserializable, Stream, Reader, FixedArray_H256_2,

|

||||

FixedArray_u8_296, FixedArray_u8_601_2};

|

||||

|

|

|

|||

|

|

@ -1,4 +1,4 @@

|

|||

extern crate rustc_serialize;

|

||||

extern crate rustc_hex as hex;

|

||||

extern crate heapsize;

|

||||

extern crate primitives;

|

||||

extern crate bitcrypto as crypto;

|

||||

|

|

@ -24,7 +24,6 @@ pub trait RepresentH256 {

|

|||

fn h256(&self) -> hash::H256;

|

||||

}

|

||||

|

||||

pub use rustc_serialize::hex;

|

||||

pub use primitives::{hash, bytes, bigint, compact};

|

||||

|

||||

pub use block::Block;

|

||||

|

|

|

|||

|

|

@ -102,7 +102,7 @@ pub struct Transaction {

|

|||

|

||||

impl From<&'static str> for Transaction {

|

||||

fn from(s: &'static str) -> Self {

|

||||

deserialize(&s.from_hex().unwrap() as &[u8]).unwrap()

|

||||

deserialize(&s.from_hex::<Vec<u8>>().unwrap() as &[u8]).unwrap()

|

||||

}

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|

@ -5,7 +5,7 @@ authors = ["debris <marek.kotewicz@gmail.com>"]

|

|||

|

||||

[dependencies]

|

||||

rand = "0.4"

|

||||

rustc-serialize = "0.3"

|

||||

rustc-hex = "2"

|

||||

lazy_static = "1.0"

|

||||

base58 = "0.1"

|

||||

eth-secp256k1 = { git = "https://github.com/ethcore/rust-secp256k1" }

|

||||

|

|

|

|||

|

|

@ -1,7 +1,7 @@

|

|||

//! Bitcoin keys.

|

||||

|

||||

extern crate rand;

|

||||

extern crate rustc_serialize;

|

||||

extern crate rustc_hex as hex;

|

||||

#[macro_use]

|

||||

extern crate lazy_static;

|

||||

extern crate base58;

|

||||

|

|

@ -19,7 +19,6 @@ mod private;

|

|||

mod public;

|

||||

mod signature;

|

||||

|

||||

pub use rustc_serialize::hex;

|

||||

pub use primitives::{hash, bytes};

|

||||

|

||||

pub use address::{Type, Address};

|

||||

|

|

|

|||

|

|

@ -108,7 +108,7 @@ impl DisplayLayout for Private {

|

|||

impl fmt::Debug for Private {

|

||||

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

|

||||

try!(writeln!(f, "network: {:?}", self.network));

|

||||

try!(writeln!(f, "secret: {}", self.secret.to_hex()));

|

||||

try!(writeln!(f, "secret: {}", self.secret.to_hex::<String>()));

|

||||

writeln!(f, "compressed: {}", self.compressed)

|

||||

}

|

||||

}

|

||||

|

|

|

|||

|

|

@ -92,14 +92,14 @@ impl PartialEq for Public {

|

|||

impl fmt::Debug for Public {

|

||||

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

|

||||

match *self {

|

||||

Public::Normal(ref hash) => writeln!(f, "normal: {}", hash.to_hex()),

|

||||

Public::Compressed(ref hash) => writeln!(f, "compressed: {}", hash.to_hex()),

|

||||

Public::Normal(ref hash) => writeln!(f, "normal: {}", hash.to_hex::<String>()),

|

||||

Public::Compressed(ref hash) => writeln!(f, "compressed: {}", hash.to_hex::<String>()),

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

impl fmt::Display for Public {

|

||||

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

|

||||

self.to_hex().fmt(f)

|

||||

self.to_hex::<String>().fmt(f)

|

||||

}

|

||||

}

|

||||

|

|

|

|||

|

|

@ -12,13 +12,13 @@ pub struct Signature(Vec<u8>);

|

|||

|

||||

impl fmt::Debug for Signature {

|

||||

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

|

||||

self.0.to_hex().fmt(f)

|

||||

self.0.to_hex::<String>().fmt(f)

|

||||

}

|

||||

}

|

||||

|

||||

impl fmt::Display for Signature {

|

||||

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

|

||||

self.0.to_hex().fmt(f)

|

||||

self.0.to_hex::<String>().fmt(f)

|

||||

}

|

||||

}

|

||||

|

||||

|

|

@ -74,13 +74,13 @@ pub struct CompactSignature(H520);

|

|||

|

||||

impl fmt::Debug for CompactSignature {

|

||||

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

|

||||

f.write_str(&self.0.to_hex())

|

||||

f.write_str(&self.0.to_hex::<String>())

|

||||

}

|

||||

}

|

||||

|

||||

impl fmt::Display for CompactSignature {

|

||||

fn fmt(&self, f: &mut fmt::Formatter) -> fmt::Result {

|

||||

f.write_str(&self.0.to_hex())

|

||||

f.write_str(&self.0.to_hex::<String>())

|

||||

}

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|

@ -64,12 +64,26 @@ impl InventoryVector {

|

|||

}

|

||||

}

|

||||

|

||||

pub fn witness_tx(hash: H256) -> Self {

|

||||

InventoryVector {

|

||||

inv_type: InventoryType::MessageWitnessTx,

|

||||

hash: hash,

|

||||

}

|

||||

}

|

||||

|

||||

pub fn block(hash: H256) -> Self {

|

||||

InventoryVector {

|

||||

inv_type: InventoryType::MessageBlock,

|

||||

hash: hash,

|

||||

}

|

||||

}

|

||||

|

||||

pub fn witness_block(hash: H256) -> Self {

|

||||

InventoryVector {

|

||||

inv_type: InventoryType::MessageWitnessBlock,

|

||||

hash: hash,

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

impl Serializable for InventoryVector {

|

||||

|

|

|

|||

|

|

@ -3,9 +3,9 @@ use primitives::hash::H256;

|

|||

use primitives::compact::Compact;

|

||||

use chain::{OutPoint, TransactionOutput, IndexedTransaction};

|

||||

use storage::{SharedStore, TransactionOutputProvider};

|

||||

use network::ConsensusParams;

|

||||

use network::{ConsensusParams, ConsensusFork, TransactionOrdering};

|

||||

use memory_pool::{MemoryPool, OrderingStrategy, Entry};

|

||||

use verification::{work_required, block_reward_satoshi, transaction_sigops};

|

||||

use verification::{work_required, block_reward_satoshi, transaction_sigops, median_timestamp_inclusive};

|

||||

|

||||

const BLOCK_VERSION: u32 = 0x20000000;

|

||||

const BLOCK_HEADER_SIZE: u32 = 4 + 32 + 32 + 4 + 4 + 4;

|

||||

|

|

@ -116,7 +116,9 @@ impl SizePolicy {

|

|||

|

||||

/// Block assembler

|

||||

pub struct BlockAssembler {

|

||||

/// Maximal block size.

|

||||

pub max_block_size: u32,

|

||||

/// Maximal # of sigops in the block.

|

||||

pub max_block_sigops: u32,

|

||||

}

|

||||

|

||||

|

|

@ -130,6 +132,8 @@ struct FittingTransactionsIterator<'a, T> {

|

|||

block_height: u32,

|

||||

/// New block time

|

||||

block_time: u32,

|

||||

/// Are OP_CHECKDATASIG && OP_CHECKDATASIGVERIFY enabled for this block.

|

||||

checkdatasig_active: bool,

|

||||

/// Size policy decides if transactions size fits the block

|

||||

block_size: SizePolicy,

|

||||

/// Sigops policy decides if transactions sigops fits the block

|

||||

|

|

@ -143,12 +147,21 @@ struct FittingTransactionsIterator<'a, T> {

|

|||

}

|

||||

|

||||

impl<'a, T> FittingTransactionsIterator<'a, T> where T: Iterator<Item = &'a Entry> {

|

||||

fn new(store: &'a TransactionOutputProvider, iter: T, max_block_size: u32, max_block_sigops: u32, block_height: u32, block_time: u32) -> Self {

|

||||

fn new(

|

||||

store: &'a TransactionOutputProvider,

|

||||

iter: T,

|

||||

max_block_size: u32,

|

||||

max_block_sigops: u32,

|

||||

block_height: u32,

|

||||

block_time: u32,

|

||||

checkdatasig_active: bool,

|

||||

) -> Self {

|

||||

FittingTransactionsIterator {

|

||||

store: store,

|

||||

iter: iter,

|

||||

block_height: block_height,

|

||||

block_time: block_time,

|

||||

checkdatasig_active,

|

||||

// reserve some space for header and transactions len field

|

||||

block_size: SizePolicy::new(BLOCK_HEADER_SIZE + 4, max_block_size, 1_000, 50),

|

||||

sigops: SizePolicy::new(0, max_block_sigops, 8, 50),

|

||||

|

|

@ -190,7 +203,7 @@ impl<'a, T> Iterator for FittingTransactionsIterator<'a, T> where T: Iterator<It

|

|||

|

||||

let transaction_size = entry.size as u32;

|

||||

let bip16_active = true;

|

||||

let sigops_count = transaction_sigops(&entry.transaction, self, bip16_active) as u32;

|

||||

let sigops_count = transaction_sigops(&entry.transaction, self, bip16_active, self.checkdatasig_active) as u32;

|

||||

|

||||

let size_step = self.block_size.decide(transaction_size);

|

||||

let sigops_step = self.sigops.decide(sigops_count);

|

||||

|

|

@ -233,7 +246,7 @@ impl<'a, T> Iterator for FittingTransactionsIterator<'a, T> where T: Iterator<It

|

|||

}

|

||||

|

||||

impl BlockAssembler {

|

||||

pub fn create_new_block(&self, store: &SharedStore, mempool: &MemoryPool, time: u32, consensus: &ConsensusParams) -> BlockTemplate {

|

||||

pub fn create_new_block(&self, store: &SharedStore, mempool: &MemoryPool, time: u32, median_timestamp: u32, consensus: &ConsensusParams) -> BlockTemplate {

|

||||

// get best block

|

||||

// take it's hash && height

|

||||

let best_block = store.best_block();

|

||||

|

|

@ -242,11 +255,23 @@ impl BlockAssembler {

|

|||

let bits = work_required(previous_header_hash.clone(), time, height, store.as_block_header_provider(), consensus);

|

||||

let version = BLOCK_VERSION;

|

||||

|

||||

let checkdatasig_active = match consensus.fork {

|

||||

ConsensusFork::BitcoinCash(ref fork) => median_timestamp >= fork.magnetic_anomaly_time,

|

||||

_ => false

|

||||

};

|

||||

|

||||

let mut coinbase_value = block_reward_satoshi(height);

|

||||

let mut transactions = Vec::new();

|

||||

|

||||

let mempool_iter = mempool.iter(OrderingStrategy::ByTransactionScore);

|

||||

let tx_iter = FittingTransactionsIterator::new(store.as_transaction_output_provider(), mempool_iter, self.max_block_size, self.max_block_sigops, height, time);

|

||||

let tx_iter = FittingTransactionsIterator::new(

|

||||

store.as_transaction_output_provider(),

|

||||

mempool_iter,

|

||||

self.max_block_size,

|

||||

self.max_block_sigops,

|

||||

height,

|

||||

time,

|

||||

checkdatasig_active);

|

||||

for entry in tx_iter {

|

||||

// miner_fee is i64, but we can safely cast it to u64

|

||||

// memory pool should restrict miner fee to be positive

|

||||

|

|

@ -255,6 +280,15 @@ impl BlockAssembler {

|

|||

transactions.push(tx);

|

||||

}

|

||||

|

||||

// sort block transactions

|

||||

let median_time_past = median_timestamp_inclusive(previous_header_hash.clone(), store.as_block_header_provider());

|

||||

match consensus.fork.transaction_ordering(median_time_past) {

|

||||

TransactionOrdering::Canonical => transactions.sort_unstable_by(|tx1, tx2|

|

||||

tx1.hash.cmp(&tx2.hash)),

|

||||

// memory pool iter returns transactions in topological order

|

||||

TransactionOrdering::Topological => (),

|

||||

}

|

||||

|

||||

BlockTemplate {

|

||||

version: version,

|

||||

previous_header_hash: previous_header_hash,

|

||||

|

|

@ -271,7 +305,16 @@ impl BlockAssembler {

|

|||

|

||||

#[cfg(test)]

|

||||

mod tests {

|

||||

use super::{SizePolicy, NextStep};

|

||||

extern crate test_data;

|

||||

|

||||

use std::sync::Arc;

|

||||

use db::BlockChainDatabase;

|

||||

use primitives::hash::H256;

|

||||

use storage::SharedStore;

|

||||

use network::{ConsensusParams, ConsensusFork, Network, BitcoinCashConsensusParams};

|

||||

use memory_pool::MemoryPool;

|

||||

use self::test_data::{ChainBuilder, TransactionBuilder};

|

||||

use super::{BlockAssembler, SizePolicy, NextStep, BlockTemplate};

|

||||

|

||||

#[test]

|

||||

fn test_size_policy() {

|

||||

|

|

@ -317,4 +360,41 @@ mod tests {

|

|||

fn test_fitting_transactions_iterator_locked_transaction() {

|

||||

// TODO

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn block_assembler_transaction_order() {

|

||||

fn construct_block(consensus: ConsensusParams) -> (BlockTemplate, H256, H256) {

|

||||

let chain = &mut ChainBuilder::new();

|

||||

TransactionBuilder::with_default_input(0).set_output(30).store(chain) // transaction0

|

||||

.into_input(0).set_output(50).store(chain); // transaction0 -> transaction1

|

||||

let hash0 = chain.at(0).hash();

|

||||

let hash1 = chain.at(1).hash();

|

||||

|

||||

let mut pool = MemoryPool::new();

|

||||

let storage: SharedStore = Arc::new(BlockChainDatabase::init_test_chain(vec![test_data::genesis().into()]));

|

||||

pool.insert_verified(chain.at(0).into());

|

||||

pool.insert_verified(chain.at(1).into());

|

||||

|

||||

(BlockAssembler {

|

||||

max_block_size: 0xffffffff,

|

||||

max_block_sigops: 0xffffffff,

|

||||

}.create_new_block(&storage, &pool, 0, 0, &consensus), hash0, hash1)

|

||||

}

|

||||

|

||||

// when topological consensus is used

|

||||

let topological_consensus = ConsensusParams::new(Network::Mainnet, ConsensusFork::BitcoinCore);

|

||||

let (block, hash0, hash1) = construct_block(topological_consensus);

|

||||

assert!(hash1 < hash0);

|

||||

assert_eq!(block.transactions[0].hash, hash0);

|

||||

assert_eq!(block.transactions[1].hash, hash1);

|

||||

|

||||

// when canonical consensus is used

|

||||

let mut canonical_fork = BitcoinCashConsensusParams::new(Network::Mainnet);

|

||||

canonical_fork.magnetic_anomaly_time = 0;

|

||||

let canonical_consensus = ConsensusParams::new(Network::Mainnet, ConsensusFork::BitcoinCash(canonical_fork));

|

||||

let (block, hash0, hash1) = construct_block(canonical_consensus);

|

||||

assert!(hash1 < hash0);

|

||||

assert_eq!(block.transactions[0].hash, hash1);

|

||||

assert_eq!(block.transactions[1].hash, hash0);

|

||||

}

|

||||

}

|

||||

|

|

|

|||

|

|

@ -8,3 +8,4 @@ lazy_static = "1.0"

|

|||

chain = { path = "../chain" }

|

||||

primitives = { path = "../primitives" }

|

||||

serialization = { path = "../serialization" }

|

||||

rustc-hex = "2"

|

||||

|

|

|

|||

|

|

@ -41,6 +41,9 @@ pub struct BitcoinCashConsensusParams {

|

|||

/// Time of monolith (aka May 2018) hardfork.

|

||||

/// https://github.com/bitcoincashorg/spec/blob/4fbb0face661e293bcfafe1a2a4744dcca62e50d/may-2018-hardfork.md

|

||||

pub monolith_time: u32,

|

||||

/// Time of magnetic anomaly (aka Nov 2018) hardfork.

|

||||

/// https://github.com/bitcoincashorg/bitcoincash.org/blob/f92f5412f2ed60273c229f68dd8703b6d5d09617/spec/2018-nov-upgrade.md

|

||||

pub magnetic_anomaly_time: u32,

|

||||

}

|

||||

|

||||

#[derive(Debug, Clone)]

|

||||

|

|

@ -68,6 +71,17 @@ pub enum ConsensusFork {

|

|||

ZCash(ZCashConsensusParams),

|

||||

}

|

||||

|

||||

#[derive(Debug, Clone, Copy)]

|

||||

/// Describes the ordering of transactions within single block.

|

||||

pub enum TransactionOrdering {

|

||||

/// Topological transaction ordering: if tx TX2 depends on tx TX1,

|

||||

/// it should come AFTER TX1 (not necessarily **right** after it).

|

||||

Topological,

|

||||

/// Canonical transaction ordering: transactions are ordered by their

|

||||

/// hash (in ascending order).

|

||||

Canonical,

|

||||

}

|

||||

|

||||

impl ConsensusParams {

|

||||

pub fn new(network: Network, fork: ConsensusFork) -> Self {

|

||||

match network {

|

||||

|

|

@ -199,6 +213,15 @@ impl ConsensusParams {

|

|||

(height == 91842 && hash == &H256::from_reversed_str("00000000000a4d0a398161ffc163c503763b1f4360639393e0e4c8e300e0caec")) ||

|

||||

(height == 91880 && hash == &H256::from_reversed_str("00000000000743f190a18c5577a3c2d2a1f610ae9601ac046a38084ccb7cd721"))

|

||||

}

|

||||

|

||||

/// Returns true if SegWit is possible on this chain.

|

||||

pub fn is_segwit_possible(&self) -> bool {

|

||||

match self.fork {

|

||||

// SegWit is not supported in (our?) regtests

|

||||

ConsensusFork::BitcoinCore if self.network != Network::Regtest => true,

|

||||

ConsensusFork::BitcoinCore | ConsensusFork::BitcoinCash(_) | ConsensusFork::ZCash(_) => false,

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

impl ConsensusFork {

|

||||

|

|

@ -225,6 +248,13 @@ impl ConsensusFork {

|

|||

}

|

||||

}

|

||||

|

||||

pub fn min_transaction_size(&self, median_time_past: u32) -> usize {

|

||||

match *self {

|

||||

ConsensusFork::BitcoinCash(ref fork) if median_time_past >= fork.magnetic_anomaly_time => 100,

|

||||

_ => 0,

|

||||

}

|

||||

}

|

||||

|

||||

pub fn max_transaction_size(&self) -> usize {

|

||||

// BitcoinCash: according to REQ-5: max size of tx is still 1_000_000

|

||||

// SegWit: size * 4 <= 4_000_000 ===> max size of tx is still 1_000_000

|

||||

|

|

@ -275,6 +305,14 @@ impl ConsensusFork {

|

|||

unreachable!("BitcoinCash has no SegWit; weight is only checked with SegWit activated; qed"),

|

||||

}

|

||||

}

|

||||

|

||||

pub fn transaction_ordering(&self, median_time_past: u32) -> TransactionOrdering {

|

||||

match *self {

|

||||

ConsensusFork::BitcoinCash(ref fork) if median_time_past >= fork.magnetic_anomaly_time

|

||||

=> TransactionOrdering::Canonical,

|

||||

_ => TransactionOrdering::Topological,

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

impl BitcoinCashConsensusParams {

|

||||

|

|

@ -284,16 +322,19 @@ impl BitcoinCashConsensusParams {

|

|||

height: 478559,

|

||||

difficulty_adjustion_height: 504031,

|

||||

monolith_time: 1526400000,

|

||||

magnetic_anomaly_time: 1542300000,

|

||||

},

|

||||

Network::Testnet => BitcoinCashConsensusParams {

|

||||

height: 1155876,

|

||||

difficulty_adjustion_height: 1188697,

|

||||

monolith_time: 1526400000,

|

||||

magnetic_anomaly_time: 1542300000,

|

||||

},

|

||||

Network::Regtest | Network::Unitest => BitcoinCashConsensusParams {

|

||||

height: 0,

|

||||

difficulty_adjustion_height: 0,

|

||||

monolith_time: 1526400000,

|

||||

magnetic_anomaly_time: 1542300000,

|

||||

},

|

||||

}

|

||||

}

|

||||

|

|

@ -390,6 +431,14 @@ mod tests {

|

|||

assert_eq!(ConsensusFork::BitcoinCash(BitcoinCashConsensusParams::new(Network::Mainnet)).max_transaction_size(), 1_000_000);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_consensus_fork_min_transaction_size() {

|

||||

assert_eq!(ConsensusFork::BitcoinCore.min_transaction_size(0), 0);

|

||||

assert_eq!(ConsensusFork::BitcoinCore.min_transaction_size(2000000000), 0);

|

||||

assert_eq!(ConsensusFork::BitcoinCash(BitcoinCashConsensusParams::new(Network::Mainnet)).min_transaction_size(0), 0);

|

||||

assert_eq!(ConsensusFork::BitcoinCash(BitcoinCashConsensusParams::new(Network::Mainnet)).min_transaction_size(2000000000), 100);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_consensus_fork_max_block_sigops() {

|

||||

assert_eq!(ConsensusFork::BitcoinCore.max_block_sigops(0, 1_000_000), 20_000);

|

||||

|

|

|

|||

|

|

@ -4,6 +4,7 @@ extern crate lazy_static;

|

|||

extern crate chain;

|

||||

extern crate primitives;

|

||||

extern crate serialization;

|

||||

extern crate rustc_hex as hex;

|

||||

|

||||

mod consensus;

|

||||

mod deployments;

|

||||

|

|

@ -11,6 +12,6 @@ mod network;

|

|||

|

||||

pub use primitives::{hash, compact};

|

||||

|

||||

pub use consensus::{ConsensusParams, ConsensusFork, BitcoinCashConsensusParams, ZCashConsensusParams};

|

||||

pub use consensus::{ConsensusParams, ConsensusFork, BitcoinCashConsensusParams, ZCashConsensusParams, TransactionOrdering};

|

||||

pub use deployments::Deployment;

|

||||

pub use network::{Magic, Network};

|

||||

|

|

|

|||

|

|

@ -108,9 +108,9 @@ impl Network {

|

|||

(&ConsensusFork::ZCash(_), Network::Mainnet) | (&ConsensusFork::ZCash(_), Network::Other(_)) => {

|

||||

use serialization;

|

||||

use chain;

|

||||

use chain::hex::FromHex;

|

||||

use hex::FromHex;

|

||||

let origin = "040000000000000000000000000000000000000000000000000000000000000000000000db4d7a85b768123f1dff1d4c4cece70083b2d27e117b4ac2e31d087988a5eac4000000000000000000000000000000000000000000000000000000000000000090041358ffff071f5712000000000000000000000000000000000000000000000000000000000000fd4005000a889f00854b8665cd555f4656f68179d31ccadc1b1f7fb0952726313b16941da348284d67add4686121d4e3d930160c1348d8191c25f12b267a6a9c131b5031cbf8af1f79c9d513076a216ec87ed045fa966e01214ed83ca02dc1797270a454720d3206ac7d931a0a680c5c5e099057592570ca9bdf6058343958b31901fce1a15a4f38fd347750912e14004c73dfe588b903b6c03166582eeaf30529b14072a7b3079e3a684601b9b3024054201f7440b0ee9eb1a7120ff43f713735494aa27b1f8bab60d7f398bca14f6abb2adbf29b04099121438a7974b078a11635b594e9170f1086140b4173822dd697894483e1c6b4e8b8dcd5cb12ca4903bc61e108871d4d915a9093c18ac9b02b6716ce1013ca2c1174e319c1a570215bc9ab5f7564765f7be20524dc3fdf8aa356fd94d445e05ab165ad8bb4a0db096c097618c81098f91443c719416d39837af6de85015dca0de89462b1d8386758b2cf8a99e00953b308032ae44c35e05eb71842922eb69797f68813b59caf266cb6c213569ae3280505421a7e3a0a37fdf8e2ea354fc5422816655394a9454bac542a9298f176e211020d63dee6852c40de02267e2fc9d5e1ff2ad9309506f02a1a71a0501b16d0d36f70cdfd8de78116c0c506ee0b8ddfdeb561acadf31746b5a9dd32c21930884397fb1682164cb565cc14e089d66635a32618f7eb05fe05082b8a3fae620571660a6b89886eac53dec109d7cbb6930ca698a168f301a950be152da1be2b9e07516995e20baceebecb5579d7cdbc16d09f3a50cb3c7dffe33f26686d4ff3f8946ee6475e98cf7b3cf9062b6966e838f865ff3de5fb064a37a21da7bb8dfd2501a29e184f207caaba364f36f2329a77515dcb710e29ffbf73e2bbd773fab1f9a6b005567affff605c132e4e4dd69f36bd201005458cfbd2c658701eb2a700251cefd886b1e674ae816d3f719bac64be649c172ba27a4fd55947d95d53ba4cbc73de97b8af5ed4840b659370c556e7376457f51e5ebb66018849923db82c1c9a819f173cccdb8f3324b239609a300018d0fb094adf5bd7cbb3834c69e6d0b3798065c525b20f040e965e1a161af78ff7561cd874f5f1b75aa0bc77f720589e1b810f831eac5073e6dd46d00a2793f70f7427f0f798f2f53a67e615e65d356e66fe40609a958a05edb4c175bcc383ea05
30e67ddbe479a898943c6e3074c6fcc252d6014de3a3d292b03f0d88d312fe221be7be7e3c59d07fa0f2f4029e364f1f355c5d01fa53770d0cd76d82bf7e60f6903bc1beb772e6fde4a70be51d9c7e03c8d6d8dfb361a234ba47c470fe630820bbd920715621b9fbedb49fcee165ead0875e6c2b1af16f50b5d6140cc981122fcbcf7c5a4e3772b3661b628e08380abc545957e59f634705b1bbde2f0b4e055a5ec5676d859be77e20962b645e051a880fddb0180b4555789e1f9344a436a84dc5579e2553f1e5fb0a599c137be36cabbed0319831fea3fddf94ddc7971e4bcf02cdc93294a9aab3e3b13e3b058235b4f4ec06ba4ceaa49d675b4ba80716f3bc6976b1fbf9c8bf1f3e3a4dc1cd83ef9cf816667fb94f1e923ff63fef072e6a19321e4812f96cb0ffa864da50ad74deb76917a336f31dce03ed5f0303aad5e6a83634f9fcc371096f8288b8f02ddded5ff1bb9d49331e4a84dbe1543164438fde9ad71dab024779dcdde0b6602b5ae0a6265c14b94edd83b37403f4b78fcd2ed555b596402c28ee81d87a909c4e8722b30c71ecdd861b05f61f8b1231795c76adba2fdefa451b283a5d527955b9f3de1b9828e7b2e74123dd47062ddcc09b05e7fa13cb2212a6fdbc65d7e852cec463ec6fd929f5b8483cf3052113b13dac91b69f49d1b7d1aec01c4a68e41ce1570101000000010000000000000000000000000000000000000000000000000000000000000000ffffffff4d04ffff071f0104455a6361736830623963346565663862376363343137656535303031653335303039383462366665613335363833613763616331343161303433633432303634383335643334ffffffff010000000000000000434104678afdb0fe5548271967f1a67130b7105cd6a828e03909a67962e0ea1f61deb649f6bc3f4cef38c4f35504e51ec112de5c384df7ba0b8d578a4c702b6bf11d5fac00000000";

|

||||

let origin = origin.from_hex().unwrap();

|

||||

let origin = origin.from_hex::<Vec<u8>>().unwrap();

|

||||

let genesis: chain::Block = serialization::deserialize_with_flags(&origin as &[u8], serialization::DESERIALIZE_ZCASH).unwrap();

|

||||

genesis

|

||||

},

|

||||

|

|

|

|||

|

|

@ -14,7 +14,7 @@ rand = "0.4"

|

|||

log = "0.4"

|

||||

abstract-ns = "0.3"

|

||||

ns-dns-tokio = "0.3"

|

||||

csv = "0.15"

|

||||

csv = "1"

|

||||

|

||||

primitives = { path = "../primitives" }

|

||||

bitcrypto = { path = "../crypto" }

|

||||

|

|

|

|||

|

|

@ -375,8 +375,9 @@ impl<T> NodeTable<T> where T: Time {

|

|||

|

||||

/// Save node table in csv format.

|

||||

pub fn save<W>(&self, write: W) -> Result<(), io::Error> where W: io::Write {

|

||||

let mut writer = csv::Writer::from_writer(write)

|

||||

.delimiter(b' ');

|

||||

let mut writer = csv::WriterBuilder::new()

|

||||

.delimiter(b' ')

|

||||

.from_writer(write);

|

||||

let iter = self.by_score.iter()

|

||||

.map(|node| &node.0)

|

||||

.take(1000);

|

||||

|

|

@ -385,7 +386,7 @@ impl<T> NodeTable<T> where T: Time {

|

|||

|

||||

for n in iter {

|

||||